IA Summit 12: Making Sense of the Data

The second and final workshop I attended at the IA Summit this year was Making Sense of the Data by Dana Chisnell. This was an interactive, half-day workshop reviewing ways to gather and synthesize customer feedback. It started with an exercise using the KJ Analysis method, moved on to several analysis techniques, and concluded with a Character Erector Set exercise.

KJ Analysis

To start off the workshop, Dana posed the following question:

“What obstacles do teams face in delivering the best possible user experiences?”

She then walked the room through a KJ Analysis to find the best possible answer to that question for our group. KJ Analysis is essentially Affinity Diagramming, for those of you more familiar with that term. When I asked her about the difference, she noted that KJ ends by prioritizing the groups through voting to produce specific ranked results, whereas an Affinity Diagram is used simply to create the groupings. The KJ technique originated in the 1960s and takes its name from its creator, Jiro Kawakita.

KJ, or Affinity Diagramming, is useful because it lets you brainstorm abstract data and organize it into concise groupings or categories from which decisions can be made. It involves eight steps and is done in silence, with no discussion until the end:

- Create a focus question for participants to answer.

- Gather the right group of people in a room to help answer the question.

- Everyone writes their thoughts and answers in simple terms, one per sticky note.

- As a group, everyone puts their sticky notes up on the wall at random.

- As a group, everyone moves similar sticky notes toward each other until groupings emerge.

- Using a different-color sticky note, each person names each grouping.

- Using a mark such as a star, each person votes for the groups they find most important to the focus question, in ranked order: for example, 1 star for the least important, 2 for the next, and 3 stars for the most important.

- The stars are totaled for each group, producing a prioritized set of answers to the question.

The entire exercise takes about an hour and is a quick, democratic way to prioritize ideas.
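The tallying in the last two steps is simple arithmetic. Here is a minimal Python sketch, assuming each person hands out 3, 2, and 1 stars as described above; the function name `tally_kj_votes` and the placeholder group names are hypothetical, not part of the workshop materials.

```python
from collections import Counter


def tally_kj_votes(ballots: list[dict[str, int]]) -> list[tuple[str, int]]:
    """Total each group's stars and return (group, stars) pairs, highest first."""
    totals: Counter = Counter()
    for ballot in ballots:
        for group, stars in ballot.items():
            # Assumed scale: 1 = least important, 3 = most important.
            if stars not in (1, 2, 3):
                raise ValueError(f"{group!r}: stars must be 1, 2, or 3, got {stars}")
            totals[group] += stars
    # Highest total first; ties broken alphabetically so the ranking is stable.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical ballots, one per participant; group names are placeholders.
    ballots = [
        {"Group A": 3, "Group B": 2, "Group C": 1},
        {"Group B": 3, "Group A": 2, "Group D": 1},
        {"Group C": 3, "Group A": 2, "Group B": 1},
    ]
    for rank, (group, stars) in enumerate(tally_kj_votes(ballots), start=1):
        print(f"{rank}. {group}: {stars} stars")
```

With these made-up ballots, Group A comes out on top with 7 stars, followed by Group B (6), Group C (4), and Group D (1).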

Rolling Issues List

The next item she reviewed was the rolling issues list, or what I often refer to as the Daily Log. At the end of a group of usability testing sessions, such as at the end of the day or after a scheduled break, all observers and the facilitator get together in a room and note their key observations and which participants each observation applied to. The list is added to in the same way throughout the rest of the testing, producing a quick set of high-level results that can be shared as soon as testing ends, before the full analysis is complete. It also gives observers a chance to take part in identifying issues and to talk about what they saw.
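At its core, the rolling issues list is a running map from each observation to the participants it applied to, merged after every debrief. The Python sketch below assumes that shape; the class and method names, the sample observations, and the choice to list issues seen with the most participants first are my own illustrative assumptions, not part of Dana's method.

```python
class RollingIssuesList:
    """Running list of observations, added to after each debrief."""

    def __init__(self) -> None:
        # Observation text -> IDs of the participants it applied to.
        self._issues: dict[str, set[str]] = {}

    def debrief(self, notes: dict[str, list[str]]) -> None:
        """Merge one debrief's notes (observation -> participant IDs) into the list."""
        for observation, participants in notes.items():
            self._issues.setdefault(observation, set()).update(participants)

    def summary(self) -> list[tuple[str, list[str]]]:
        """Quick high-level results: most widely seen observations first (assumed ordering)."""
        rows = [(obs, sorted(ids)) for obs, ids in self._issues.items()]
        return sorted(rows, key=lambda row: (-len(row[1]), row[0]))


if __name__ == "__main__":
    issues = RollingIssuesList()
    # Hypothetical end-of-day debriefs; observations and participant IDs are invented.
    issues.debrief({"Missed the Save button": ["P1", "P3"], "Unsure what 'Archive' does": ["P2"]})
    issues.debrief({"Missed the Save button": ["P4", "P1"]})
    for observation, participants in issues.summary():
        print(f"{observation} ({len(participants)}): {', '.join(participants)}")
```

Using a set per observation means a participant noted again in a later debrief is only counted once.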

Observation to Direction

The next method she covered was about moving from observations to inferences to arrive at a direction. It starts with writing down your observations about the interface from a variety of research. She defined observations as what you saw and what you heard. Next, you record your inferences about those observations, that is, why a participant did or said those particular things. She classified inferences as judgments, conclusions, guesses, and intuition. Finally, you draw a direction, or solution, from your inferences. The direction is your evidence for a design change, or your theory about the fix.

Dana recommended creating an Observation to Direction worksheet: a matrix in which each column represents a different experience and the rows cover the Observation, Inference, and Direction.
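If you keep the worksheet digitally rather than on paper, the layout is easy to generate. This is a minimal Python sketch, assuming one plain-text cell per stage; the `Column` class, `render_worksheet` function, column width, and sample entries are all hypothetical illustrations rather than Dana's template.

```python
from dataclasses import dataclass

STAGES = ("observation", "inference", "direction")


@dataclass
class Column:
    observation: str  # what you saw or heard
    inference: str    # why it happened: a judgment, conclusion, guess, or intuition
    direction: str    # evidence for a design change, or a theory about the fix


def render_worksheet(columns: dict[str, Column], width: int = 24) -> str:
    """Lay out one column per experience and one row per stage as plain text."""
    names = list(columns)
    # Header row: a blank label cell, then one cell per experience.
    header = " | ".join([" " * 12] + [f"{name:<{width}.{width}}" for name in names])
    lines = [header, "-" * len(header)]
    for stage in STAGES:
        # Cell text longer than `width` is truncated to keep the grid aligned.
        cells = [f"{getattr(columns[name], stage):<{width}.{width}}" for name in names]
        lines.append(" | ".join([f"{stage.capitalize():<12}"] + cells))
    return "\n".join(lines)


if __name__ == "__main__":
    # Invented example entries, for illustration only.
    sheet = {
        "Checkout": Column("Skipped coupon field", "Looks like an ad", "Move it near total"),
        "Sign-up": Column("Paused at password rule", "Rule shown too late", "Show rule up front"),
    }
    print(render_worksheet(sheet))
```

Keeping each stage as a named field makes it hard to skip straight from an observation to a direction without writing down the inference in between.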

Character Erector

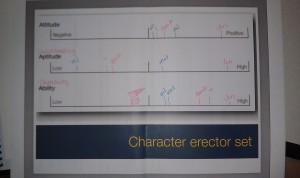

The Character Erector exercise (later dubbed Character Creator) was used to evaluate individuals, or archetypes, based on Attitude, Aptitude, and Ability.

- Attitude – risk tolerance, motivations, persistence

- Aptitude – knowledge, ability, expertise

- Ability – physical and cognitive constraints or attributes

To complete the exercise, each of us thought of three people we knew, then interviewed another workshop member about their three people using the Character Erector questions provided. The nine questions asked about the person in general, how likely they were to try to solve a problem on their own, their tech ability and vocabulary, and their possible age range.

We then marked where we thought each of the three people fell on a scale from low to high for each attribute: Attitude, Aptitude, and Ability.

Upon completing the exercise, I noticed my group had had a lot of trouble relating to the term Aptitude, so we renamed it Understanding. We also changed Ability to Capability. The resulting set, Attitude, Understanding, and Capability, made it easier for us to connect each term with what it was trying to judge.

The goal of the Character Erector, then, is to let you make inferences about the content, functionality, and IA of an application or site for these characters, based on a particular task or goal and where each character falls on these scales. Somewhat like a persona, it helps you understand which strengths or weaknesses a character brings to a task.

Images & Slides

The slides can be downloaded from this PDF link.

I also took some pictures of the session and of my notes, which can be seen below:
